On March 13, 2024, the European Parliament approved the Artificial Intelligence Act in plenary session, the most significant step in its approval process. This legislation establishes a comprehensive regulatory framework for the use of AI systems in EU Member States, prioritizing safety, transparency and accountability.

The most significant aspect of the regulation is its focus on classifying AI systems according to their risk level. This strategy allows for more precise and effective regulation with robust safety standards and fundamental rights protection.

The Act was published in the Official Journal of the European Union on July 12, 2024 and entered into force on August 1, 2024. However, most of its provisions do not apply immediately; they take effect according to the following timeline:

- February 2, 2025: Prohibition of unacceptable-risk AI systems.

- August 2, 2025: Governance obligations for General Purpose AI (GPAI). By this date, national competent authorities must also be designated.

- August 2, 2026: General application of the remaining AI Act provisions, with the exception of certain high-risk system obligations that apply from August 2, 2027.
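As a rough illustration, the timeline above can be encoded as a small lookup that a compliance checklist might query. This is only a sketch: the `MILESTONES` table and the `applicable_milestones` helper are hypothetical names introduced here, and the labels simply paraphrase the bullets above rather than the legal text.

```python
from datetime import date
from typing import List

# Application dates taken from the timeline above; labels are paraphrases,
# not legal wording (assumption for illustration only).
MILESTONES = [
    (date(2025, 2, 2), "Prohibition of unacceptable-risk AI systems"),
    (date(2025, 8, 2), "GPAI governance obligations and designation of national authorities"),
    (date(2026, 8, 2), "Remaining AI Act provisions"),
]


def applicable_milestones(on: date) -> List[str]:
    """Return the milestones whose application date is on or before `on`."""
    return [label for start, label in MILESTONES if start <= on]
```

Calling `applicable_milestones(date(2025, 3, 1))` would return only the first milestone, since the other two dates have not yet been reached on that day.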

Risk Level Categorization

The AI Act classifies AI systems based on their risk level into the following categories:

- Unacceptable Risk: AI systems that pose a direct threat to public safety, fundamental rights, or privacy. Their use is strictly prohibited, except in very exceptional cases.

- High Risk: AI systems that could significantly impact fundamental rights, particularly in health, safety, or employment. Their use is permitted only under strict safeguards and continuous monitoring.

- Limited Risk: This category refers to AI systems that interact directly with users, such as generative AI tools and chatbots. These systems must comply with specific transparency obligations.

- Low or No Risk: AI systems that do not fall into the above categories are considered low or no risk. While this classification is not explicitly defined, it is determined by exclusion. In this category, users must have the freedom to make informed, voluntary, and unequivocal decisions about using these technologies.
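The "determined by exclusion" logic of these four categories can be sketched in a few lines. The three boolean inputs below are hypothetical simplifications introduced for illustration; classifying a real system requires legal analysis of the Act and its annexes, not three flags.

```python
from enum import Enum


class RiskLevel(Enum):
    UNACCEPTABLE = "unacceptable risk"
    HIGH = "high risk"
    LIMITED = "limited risk"
    LOW_OR_NONE = "low or no risk"


def classify(is_prohibited_practice: bool,
             is_high_risk_use: bool,
             interacts_with_users: bool) -> RiskLevel:
    """Check categories from most to least severe; the last one is reached by exclusion."""
    if is_prohibited_practice:
        return RiskLevel.UNACCEPTABLE
    if is_high_risk_use:
        return RiskLevel.HIGH
    if interacts_with_users:
        return RiskLevel.LIMITED
    # No explicit test exists for this category: it is whatever remains.
    return RiskLevel.LOW_OR_NONE
```

The ordering of the checks matters: a system that matches several categories is assigned the most severe one.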

Biometric Recognition Systems: A Focus on Consent and Risk

The AI Act significantly expands the legal framework for biometric technology applications.

Previously, biometric data in EU legislation was linked exclusively to biometric recognition (e.g., GDPR defines biometric data strictly in terms of verification or identification). However, the AI Act introduces a broader perspective, covering the following applications:

- Biometric Recognition Systems:

  - Biometric verification (1:1)

  - Biometric identification (1:N)

- Biometric categorization

- Emotion inference systems

This distinction is crucial, as it clarifies that these functionalities are fundamentally different. For example, a recognition system is not designed for categorization, as its training is tailored for a specific purpose.
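The difference between verification (1:1) and identification (1:N) can be sketched as follows. This is a minimal illustration, assuming faces are already represented as numeric embedding vectors and compared by cosine similarity; the function names and the `0.8` threshold are hypothetical and do not describe any particular product.

```python
import math
from typing import Dict, Optional, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm


def verify(probe: Sequence[float], reference: Sequence[float],
           threshold: float = 0.8) -> bool:
    """1:1 check: does the probe match the single reference the user claims to be?"""
    return cosine_similarity(probe, reference) >= threshold


def identify(probe: Sequence[float], gallery: Dict[str, Sequence[float]],
             threshold: float = 0.8) -> Optional[str]:
    """1:N search: return the best-matching identity in the gallery, or None."""
    best_id, best_score = None, threshold
    for identity, reference in gallery.items():
        score = cosine_similarity(probe, reference)
        if score >= best_score:
            best_id, best_score = identity, score
    return best_id
```

Verification compares the probe against one reference that the person has claimed, whereas identification searches an entire database, which is why the two carry different privacy implications.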

Modification of the Biometric Data Definition

| GDPR | AI Act |
|---|---|
| Article 4(14): “Biometric data means personal data resulting from specific technical processing, relating to the physical, physiological, or behavioral characteristics of a natural person, enabling or confirming their unique identification, such as facial images or fingerprint data.” | Article 3(34): “Biometric data means personal data obtained from a specific technical processing, related to the physical, physiological, or behavioral characteristics of a natural person, such as facial images or fingerprint data.” |
| Article 9(1): “Biometric data processed for the purpose of uniquely identifying a natural person.” | Article 3(35): “Biometric identification means the automated recognition of human characteristics of a physical, physiological, behavioral, or psychological nature to determine a person’s identity by comparing their biometric data with biometric data stored in a database.” |
| | Article 3(36): “Biometric verification means the automated one-to-one verification of a person’s identity, including authentication, by comparing their biometric data with previously provided biometric data.” |

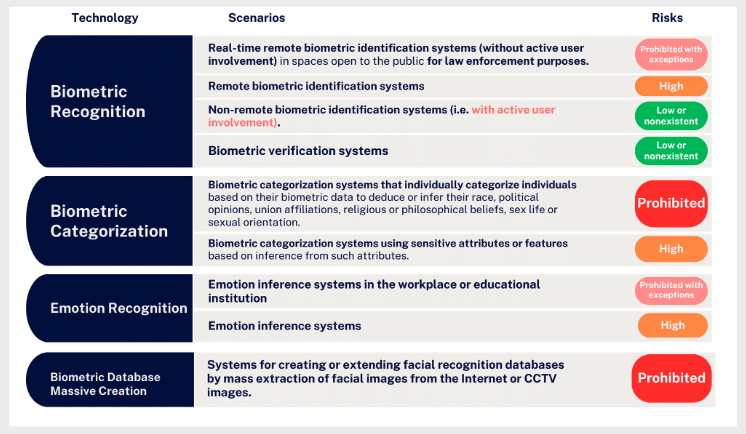

Classification of Biometric Systems by Risk

The AI Act evaluates biometric systems based on their impact on health, safety, and fundamental rights, acknowledging that risk levels vary by application.

Benefits of Artificial Intelligence in Businesses

The proper use of Artificial Intelligence (AI) in businesses provides not only security but also efficiency and precision in responding to customer demands. Companies can ensure safer and more efficient operations with wholly-owned solutions that are continuously updated and trained.

This constant evolution in AI is reflected in technologies backed by independent evaluations and certifications, such as those from NIST or iBeta, which attest to the reliability and efficiency of their applications.

Another crucial aspect is AI’s ability to quickly and securely verify identities, saving management costs and enhancing identity protection, a key factor in today’s business environment.

Despite implementation challenges like needing high-quality data and ensuring transparency and accountability, AI’s benefits are significant. A KPMG study indicates that over 50% of businesses consider AI essential for growth in the coming years.

Veridas Solutions: Security, Privacy, and Tier 1 Technology

At Veridas, we integrate Artificial Intelligence into our biometric solutions to ensure precision, functionality, and privacy by design. Modern biometrics has evolved towards Renewable Biometric References (RBRs), a revolutionary alternative to traditional biometric templates and passwords.

In a world where digitalization is advancing and cyberattacks are becoming increasingly sophisticated, authentication must be secure and privacy-focused.

The Problems with Traditional Methods

- Passwords are obsolete: They are vulnerable, costly, and create ongoing security issues. Phishing, credential theft, and poor password management undermine trust in digital interactions.

- Traditional biometric templates pose risks: While they enhance security compared to passwords, they remain reversible, interoperable, and irrevocable. If a biometric template is compromised, the user is permanently exposed.

RBRs: The New Era of Secure Biometrics

RBRs are designed to solve these challenges and offer an unprecedented level of protection:

- Multiplicity: The same face generates different RBRs depending on the system or context.

- Irreversibility: It is impossible to reconstruct the original facial image from an RBR.

- Non-interoperability: Only the system that created the RBR can interpret it.

- Revocability: If an RBR is compromised, it can be easily revoked and replaced.
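To make these four properties concrete, here is a toy sketch of the general idea behind revocable ("cancelable") biometric references, using a keyed random projection followed by sign binarization. It is not Veridas's RBR implementation, and it does not offer the formal irreversibility guarantees a production scheme needs; `make_reference`, `hamming_similarity`, and the system keys are hypothetical names introduced for illustration.

```python
import random
from typing import Sequence, Tuple


def make_reference(embedding: Sequence[float], system_key: str,
                   n_bits: int = 64) -> Tuple[int, ...]:
    """Project the embedding onto key-seeded random directions and keep only the signs."""
    rng = random.Random(system_key)  # the key determines the projection directions
    bits = []
    for _ in range(n_bits):
        direction = [rng.gauss(0.0, 1.0) for _ in embedding]
        projection = sum(d * x for d, x in zip(direction, embedding))
        bits.append(1 if projection >= 0 else 0)  # magnitudes are discarded
    return tuple(bits)


def hamming_similarity(a: Sequence[int], b: Sequence[int]) -> float:
    """Fraction of positions where two equal-length bit sequences agree."""
    return sum(x == y for x, y in zip(a, b)) / len(a)
```

In this sketch, the same embedding yields different references under different keys (multiplicity), references created under one key cannot be meaningfully compared with those from another (non-interoperability), and a compromised reference is replaced by re-enrolling under a fresh key (revocability). Discarding the projection magnitudes only hints at irreversibility; real schemes rely on stronger constructions.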

Additionally:

- We utilize advanced neural network models (convolutional, residual, transformers) and regression techniques to optimize our solutions.

- We specialize in biometric verification (1:1) and non-remote biometric identification (1:N), categorized as low or no risk under the AI Act.

- We implement initiatives to improve the explainability and transparency of our systems.

- We were pioneers in Spain in conducting an AI Ethics Diagnosis, endorsed by PricewaterhouseCoopers (PwC).

The Future of Artificial Intelligence Laws: A Global Perspective

The European Union’s recent AI legislation sets a precedent within the EU and serves as a model globally, guiding other nations in shaping AI policies that balance innovation with ethical regulation.

Spain’s ‘Regulatory Sandbox’ illustrates how EU Member States are implementing the AI Act framework at national level, creating a secure environment for AI development.

Concurrently, the UK’s Online Safety Act (formerly the ‘Online Safety Bill’) aims to enhance digital user protection. These regulatory efforts, including the EU’s legislation, indicate a global trend toward regulating emerging technologies, emphasizing the protection of individual rights and safety in the digital age.

The EU’s AI Act marks a significant international regulatory advancement, setting a standard for ethical and safe technology adoption and influencing future legislation worldwide.

This approach underscores the EU’s commitment to protecting its citizens while fostering responsible innovation, leading the way towards a more ethical and secure future in AI.