Artificial intelligence (AI) has transformed the way businesses operate, offering powerful tools to enhance security, streamline processes, and improve user experiences. However, the same AI advancements have also been exploited by cybercriminals to develop more sophisticated fraud techniques. Generative AI, in particular, has enabled scammers to create highly realistic fake content, manipulate identities, and execute financial fraud at an unprecedented scale.

As AI-driven fraud continues to evolve, organizations must implement robust detection and prevention strategies to mitigate risks. Machine learning, biometric authentication, and real-time fraud analysis are becoming essential tools in this battle. Financial institutions, businesses, and governments are increasingly investing in AI-based security solutions to counteract these emerging threats.

In this article, we will explore AI-driven fraud, its impact on various industries, and the crucial role of AI in fraud prevention. We will also examine the challenges associated with AI fraud detection and how companies like Veridas are leading the charge in protecting businesses and consumers from generative AI fraud. By understanding these risks and solutions, organizations can strengthen their defenses against increasingly sophisticated cyber threats.

Understanding AI-Driven Fraud

AI-driven fraud refers to fraudulent activities that use artificial intelligence to deceive individuals, businesses, and financial institutions. These scams often use machine learning models to generate fake identities, manipulate digital content, and run highly convincing phishing attacks. Unlike traditional fraud, which depends on manual effort for each attempt, AI fraud can be fully automated, making it more scalable and harder to detect.

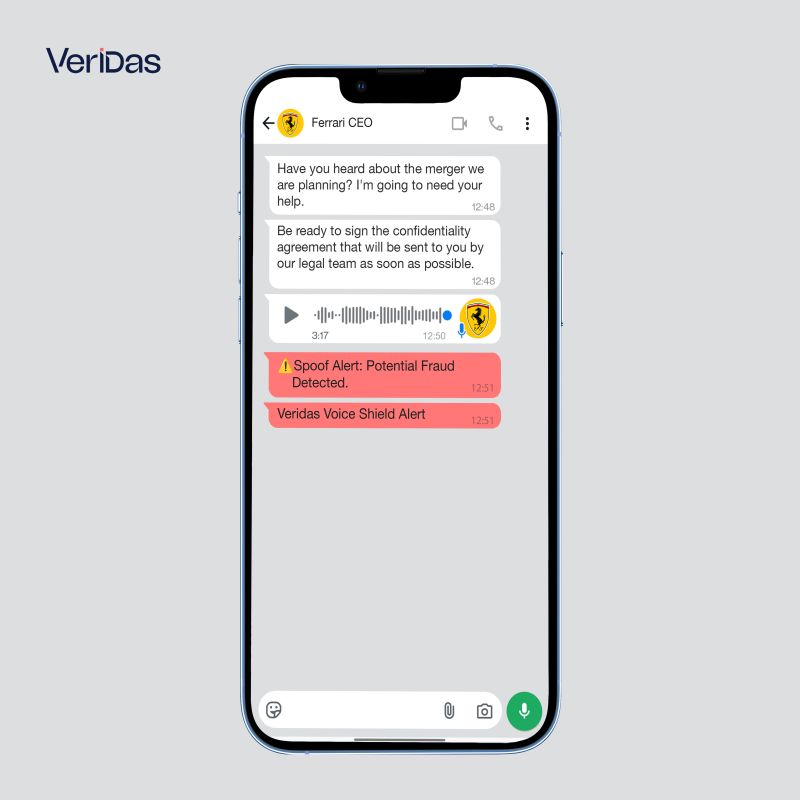

The sophistication of AI-driven fraud is particularly concerning because it enables cybercriminals to bypass conventional security measures. Fraudsters can use AI to generate deepfake videos and synthetic voice recordings that mimic real individuals. These AI-generated assets can be used to gain unauthorized access to financial accounts, execute business email compromise (BEC) scams, and create false identities for fraudulent transactions.

With the increasing accessibility of AI technology, fraudsters can automate their attacks, allowing them to target multiple victims simultaneously. This makes AI fraud a growing concern for financial institutions, e-commerce platforms, and government agencies. Detecting and preventing these types of scams requires advanced AI-based security measures that can differentiate between legitimate and fraudulent activity in real time.

“At Veridas, we’ve been fighting fraud for years, adapting to every new challenge. Now, with Generative AI, the game has changed—fraudsters are more sophisticated than ever. That’s why our Advanced Injection Fraud Detection is a game-changer, helping businesses stay ahead, protect their operations, and keep customer trust intact.”

Javier San Agustin, CTO at Veridas.

What is AI Fraud?

The Role of Generative AI in Financial Scams

AI fraud encompasses a wide range of fraudulent activities that leverage artificial intelligence to manipulate, deceive, and exploit victims. One of the most concerning aspects of AI fraud is the use of generative AI to create realistic yet fake content, such as deepfake videos, synthetic identities, and AI-generated phishing emails. These sophisticated attacks make it increasingly difficult to distinguish between legitimate and fraudulent interactions.

In financial scams, generative AI is used to impersonate individuals, fabricate financial documents, and spoof identity verification checks. For example, fraudsters can generate a highly realistic deepfake video or audio recording of a company executive instructing employees to transfer funds to a fraudulent account. Similarly, AI-generated synthetic identities can be used to open bank accounts and apply for loans under false pretenses, causing significant financial losses.

The rapid advancement of generative AI has made these scams more accessible and effective, increasing their impact on financial institutions and businesses. To combat AI-driven fraud, organizations must adopt advanced fraud detection techniques, such as AI-powered behavioral analysis and biometric authentication, to verify identities and detect anomalies in real time.

AI and Fraud Prevention Strategies

Machine Learning for Fraud Detection

Machine learning is one of the most powerful tools in combating AI-driven fraud. By analyzing vast amounts of data, machine learning algorithms can identify patterns, detect anomalies, and flag suspicious transactions in real time. Unlike traditional fraud detection methods that rely only on predefined rules, machine learning models can be retrained as new data arrives, so they can learn to recognize new fraudulent tactics.

One of the key applications of machine learning in fraud prevention is anomaly detection. AI systems can analyze user behavior and detect deviations from normal activity, signaling potential fraud. For example, if a user suddenly initiates a large financial transfer from an unusual location, the system can flag the transaction for further verification. This proactive approach helps prevent fraud before it causes financial harm.
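The transfer example above can be sketched in a few lines. This is a minimal illustration, not a production detector: the `Transaction` model, the `is_suspicious` function, and the z-score cutoff of 3.0 are all assumptions made for this sketch, and a real system would use many more signals and tune its thresholds on labeled data.

```python
from dataclasses import dataclass
from statistics import mean, stdev


@dataclass
class Transaction:
    """Hypothetical, simplified transaction record."""
    amount: float
    country: str


def is_suspicious(history, new_tx, z_threshold=3.0):
    """Flag a transfer that is both unusually large and from a new location.

    history: the user's past transactions.
    z_threshold: assumed cutoff for "unusually large", in standard deviations.
    """
    amounts = [tx.amount for tx in history]
    if len(amounts) < 2:
        return False  # too little history to estimate normal behavior

    mu, sigma = mean(amounts), stdev(amounts)
    if sigma == 0:
        # Every past amount was identical, so any larger amount is unusual.
        unusual_amount = new_tx.amount > mu
    else:
        unusual_amount = (new_tx.amount - mu) / sigma > z_threshold

    known_countries = {tx.country for tx in history}
    unusual_location = new_tx.country not in known_countries
    return unusual_amount and unusual_location


history = [Transaction(40.0, "ES"), Transaction(55.0, "ES"),
           Transaction(60.0, "ES"), Transaction(45.0, "ES")]
print(is_suspicious(history, Transaction(5000.0, "BR")))
print(is_suspicious(history, Transaction(52.0, "ES")))
```

Requiring both conditions keeps the sketch close to the example in the text. A flagged transaction would typically be sent for further verification rather than blocked outright.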

Additionally, machine learning can enhance fraud prevention by reducing false positives. Traditional security systems often block legitimate transactions because of rigid fraud detection rules. A well-trained model can reduce these errors by distinguishing more accurately between normal and suspicious activity, giving users a smoother experience without compromising security.
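To make "false positives" concrete, the sketch below computes the false positive rate and the detection rate for two ways of flagging the same transactions: a fixed amount rule and a score threshold. The data is a hand-made toy set, and the scores stand in for the output of some hypothetical trained model. It shows how the metrics are calculated and is not evidence of how any real system performs.

```python
def false_positive_rate(flags, labels):
    """Share of legitimate transactions (label False) that were flagged."""
    legit = [flag for flag, is_fraud in zip(flags, labels) if not is_fraud]
    return sum(legit) / len(legit) if legit else 0.0


def detection_rate(flags, labels):
    """Share of fraudulent transactions (label True) that were flagged."""
    fraud = [flag for flag, is_fraud in zip(flags, labels) if is_fraud]
    return sum(fraud) / len(fraud) if fraud else 0.0


# Toy data: (amount, hypothetical model score, is_fraud). Values are invented.
SAMPLE = [
    (1500.0, 0.10, False),
    (2000.0, 0.15, False),
    (50.0, 0.05, False),
    (120.0, 0.20, False),
    (1800.0, 0.95, True),
    (300.0, 0.85, True),
]

labels = [is_fraud for _, _, is_fraud in SAMPLE]
rule_flags = [amount > 1000.0 for amount, _, _ in SAMPLE]   # rigid rule
model_flags = [score >= 0.8 for _, score, _ in SAMPLE]      # score threshold

for name, flags in (("rule", rule_flags), ("model", model_flags)):
    print(name, false_positive_rate(flags, labels), detection_rate(flags, labels))
```

On this toy set the amount rule flags two of the four legitimate transactions and misses the small fraudulent one, while the score threshold flags neither legitimate transaction. Tracking both metrics together matters, because lowering false positives is only useful if detection does not drop with it.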

AI in Banking and Identity Theft Prevention

The banking industry has been one of the primary targets for AI-driven fraud, leading to increased investment in AI-based security solutions. Banks and financial institutions are leveraging AI to monitor transactions, authenticate users, and detect fraudulent activities with greater accuracy. One of the most effective applications of AI in banking security is biometric authentication, which verifies users based on their unique physical traits.

AI-driven biometric authentication includes facial recognition, voice recognition, and fingerprint scanning. These technologies provide an additional layer of security, making it difficult for fraudsters to impersonate legitimate users. For example, a bank can require facial recognition verification before authorizing a high-value transaction, making it much harder for anyone other than the account holder to complete the process.
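The high-value transaction example amounts to a step-up authentication policy, sketched below. The `HIGH_VALUE_LIMIT` of 10,000, the `Decision` values, and the `authorize` function are assumptions for illustration. The facial recognition check itself is out of scope here and is represented only by its result.

```python
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REQUIRE_FACE_CHECK = "require_face_check"
    REJECT = "reject"


HIGH_VALUE_LIMIT = 10_000.0  # assumed threshold; a bank would set its own


def authorize(amount, face_verified=None):
    """Decide how to handle a transaction.

    face_verified: None if no biometric check has run yet,
    otherwise the boolean result of that check.
    """
    if amount < HIGH_VALUE_LIMIT:
        return Decision.APPROVE
    if face_verified is None:
        return Decision.REQUIRE_FACE_CHECK  # step up before going further
    return Decision.APPROVE if face_verified else Decision.REJECT


print(authorize(250.0))
print(authorize(25_000.0))
print(authorize(25_000.0, face_verified=True))
```

Keeping the biometric check as a separate step means low-value transactions stay frictionless, and only the riskier ones pay the cost of extra verification.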

Another crucial aspect of AI in banking security is identity verification. AI systems can analyze digital identity documents, detect signs of forgery, and compare them with biometric data to confirm authenticity. This prevents fraudsters from using stolen or synthetic identities to gain unauthorized access to financial services, significantly reducing identity theft and financial fraud risks.
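One common way to compare a document photo with a live capture is to convert each face into a numeric embedding and measure how similar the two vectors are. The sketch below assumes the embeddings already exist (producing them requires a face recognition model, which is not shown) and uses cosine similarity with an assumed threshold of 0.8. The function names and the threshold are illustrative, not taken from any specific product.

```python
import math

MATCH_THRESHOLD = 0.8  # assumed value; real systems calibrate this on test data


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # a zero vector carries no identity information
    return dot / (norm_a * norm_b)


def faces_match(document_embedding, selfie_embedding, threshold=MATCH_THRESHOLD):
    """True when the document photo and the selfie are similar enough."""
    return cosine_similarity(document_embedding, selfie_embedding) >= threshold


# Tiny made-up vectors; real embeddings have hundreds of dimensions.
print(faces_match([1.0, 0.0, 0.0], [0.9, 0.1, 0.0]))
print(faces_match([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))
```

A similarity check like this only confirms that two images show the same face. It does not confirm that the selfie came from a live person, which is why it is usually paired with forgery and liveness checks.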

The Future of AI in Fraud Detection

Challenges and Ethical Concerns

While AI plays a crucial role in fraud detection, it also presents significant challenges and ethical concerns. One of the primary concerns is the potential for AI-driven fraud to outpace fraud detection technologies. As fraudsters continuously refine their techniques, security systems must constantly evolve to keep up with emerging threats.

Another challenge is the risk of false positives and discrimination in AI-driven security systems. If not properly trained, AI models can unfairly flag certain individuals or transactions as fraudulent based on biased data. Ensuring fairness and transparency in AI fraud detection requires ongoing monitoring and ethical AI development practices.
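One simple form of the ongoing monitoring mentioned above is to compare false positive rates across groups of users. The sketch below is a minimal example under stated assumptions: the record layout `(group, flagged, is_fraud)` and the function name are hypothetical, and which grouping is appropriate is a policy question the code does not answer.

```python
from collections import defaultdict


def false_positive_rate_by_group(records):
    """Return {group: share of legitimate records that were flagged}.

    records: iterable of (group, flagged, is_fraud) tuples.
    Groups with no legitimate records are left out of the result.
    """
    flagged_legit = defaultdict(int)
    total_legit = defaultdict(int)
    for group, flagged, is_fraud in records:
        if is_fraud:
            continue  # only legitimate activity can be a false positive
        total_legit[group] += 1
        if flagged:
            flagged_legit[group] += 1
    return {g: flagged_legit[g] / total_legit[g] for g in total_legit}


# Invented audit sample for illustration only.
audit = [
    ("A", True, False), ("A", False, False),
    ("B", False, False), ("B", False, False), ("B", True, True),
]
print(false_positive_rate_by_group(audit))
```

A large gap between groups does not prove bias on its own, but it is a signal that the training data and features deserve a closer look.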

Additionally, the widespread use of AI in fraud detection raises privacy concerns. AI-driven security systems rely on extensive data collection, including biometric information and behavioral analytics. Organizations must implement strong data protection policies to ensure that user data is handled securely and ethically while maintaining compliance with privacy regulations.

Comprehensive Protection Against Generative AI Fraud: Veridas’ Approach

The rise of generative AI has ushered in a new era of identity fraud, making digital security more critical than ever. What was once complex and expensive is now accessible, scalable, and cost-effective for fraudsters. In response to this escalating threat, Veridas emphasizes the importance of securing the entire identity journey, from digital onboarding to ongoing authentication.

Traditional security measures are increasingly inadequate against sophisticated AI-generated fraud. Fraudsters can bypass conventional defenses by injecting synthetic data directly into systems, rendering standard detection methods ineffective. To counteract these advanced tactics, Veridas has developed a suite of robust security measures. These include API security to prevent unauthorized access and virtual camera detection to block fake cameras, ensuring the integrity of biometric data capture.

By implementing these advanced security measures, Veridas aims to provide a comprehensive defense against the evolving landscape of AI-driven fraud. Their holistic approach ensures that every stage of the identity process is fortified, offering individuals and organizations enhanced protection in an increasingly digital world.

New Defense: Advanced Injection Attack Detection

- Ensures device authenticity – Identifies emulators, virtual machines, and automated bots before fraudulent activity can begin.

- Prevents large-scale fraud – Halts emulated attacks at their origin, stopping scammers before they infiltrate systems.

- Detects and blocks AI-powered fraud – Prevents synthetic data injection attempts that conventional security measures often overlook.

- Works alongside liveness detection – Enhances security across biometric verification methods, including facial recognition, voice verification, and AI document validation.
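To give a rough idea of what device and camera checks involve, the sketch below collects reasons to distrust a capture session from two signals. It is not Veridas' detection logic, which the article does not describe. The `CaptureContext` fields, the hint list, and the function name are all hypothetical, and a name-based check like this is trivial to evade, so it only illustrates the shape of the decision.

```python
from dataclasses import dataclass

# Illustrative, incomplete hints; real detection does not rely on names alone.
VIRTUAL_CAMERA_HINTS = ("virtual", "manycam", "droidcam")


@dataclass
class CaptureContext:
    """Hypothetical signals gathered before biometric capture starts."""
    camera_label: str
    is_emulator: bool  # assumed to come from a separate device-integrity check


def capture_risk_reasons(ctx):
    """Return a list of reasons the capture should not be trusted."""
    reasons = []
    label = ctx.camera_label.lower()
    if any(hint in label for hint in VIRTUAL_CAMERA_HINTS):
        reasons.append("camera label suggests a virtual camera")
    if ctx.is_emulator:
        reasons.append("device reported as an emulator")
    return reasons


print(capture_risk_reasons(CaptureContext("OBS Virtual Camera", False)))
print(capture_risk_reasons(CaptureContext("FaceTime HD Camera", False)))
```

Returning reasons rather than a single boolean makes it easier to log why a session was stopped and to combine these checks with liveness detection later in the flow.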

A New Standard in Fraud Prevention

- Verify the device. Real phone or emulator? Genuine user or bot?

- Stop fraud at the source. Catch AI-driven injection attacks before they enter the system.

- Secure the entire journey. Not just identity verification—the device itself.

Start detecting generative AI identity fraud

Generative AI fraud is an evolving threat that requires constant vigilance and advanced security measures. As cybercriminals exploit AI to execute more sophisticated scams, organizations must invest in AI-driven fraud detection strategies to protect themselves and their customers. Companies like Veridas are paving the way for safer, AI-powered security solutions that detect and prevent fraud in real time.

By integrating machine learning, biometric authentication, and advanced anomaly detection, businesses can enhance their fraud prevention capabilities and mitigate financial risks. The future of fraud detection will depend on continuous innovation, ethical AI development, and collaboration between technology providers and regulatory bodies.

Understanding the risks and solutions surrounding AI fraud is essential for businesses to stay ahead of cyber threats. By embracing AI-powered security technologies, organizations can build a more secure digital environment and protect their assets from the growing dangers of AI-driven scams.